Why AI Can’t Fix a Broken Brand Strategy

Branding

13/2/2026

A broken brand strategy is often mistaken for a content problem or a design problem, because the symptoms show up in marketing communications, sales assets, and inconsistent execution. That framing makes generative AI feel like an obvious fix: use AI tools to generate more copy, more concepts, and more variations, and move faster. The risk is that speed amplifies the underlying failure. AI systems scale outputs, not intent, and they do so through next-word prediction over training data, a process that is indifferent to the actual brand promise. If the input given to AI is weak, biased, or reflects an already broken strategy, the output will continue that trend.

This is why AI can’t fix a broken brand strategy. Artificial intelligence can help a brand team process input data, run market research faster, and draft AI-generated content for review, but it cannot supply core identity, purpose, or positioning clarity. When strategy is missing, AI outputs increase noise, and AI hallucinations can introduce factually incorrect details that erode customer trust and brand equity.

What a Broken Brand Strategy Actually Means

Defining brand strategy vs tactics

Brand strategy defines the long-term system that governs what the brand represents, who the target audience is, what the brand promise is, and how the business creates value in a way that remains coherent over time. Tactics are execution choices, such as campaigns, posts, landing pages, and content, that deliver on the strategy in specific contexts.

When this distinction collapses, teams treat output volume as progress. They create more messaging variants, more visual directions, and more AI-generated text, but the brand’s identity does not become clearer. Strategy sets boundaries, and tactics should operate inside those boundaries, including when teams use generative artificial intelligence to produce outputs at scale.

Symptoms of brand strategy breakdown

A strategy breakdown is visible in how customers perceive the company across touchpoints. Common symptoms include inconsistent brand messaging, shifting priorities by channel, and internal disagreements about what the brand stands for. Teams may produce contradictory positioning statements, different value propositions for the same offer, or a tone that changes depending on who created the asset.

Operationally, this shows up as duplicated work, rework, and low confidence in decisions. The brand team becomes an escalation layer for disagreements rather than a source of direction. The company’s reputation becomes fragile because its market presence depends on short-term reactions instead of a consistent core identity.

Brand dilution as a strategic failure

Brand dilution is often discussed as a consequence of design inconsistency, but it occurs primarily when strategic focus fails. Dilution happens when a brand extension or expansion stretches meaning faster than the organization can sustain the core brand, its perceived value, and its integrity. Excessive brand extension, over-licensing, and rapid product launches into new territory can weaken a once-strong brand, especially when the existing brand is pushed into unrelated product categories.

In this sense, brand dilution is a strategic failure in brand management. The brand’s identity becomes unclear, loyal customers lose confidence, and brand loyalty becomes harder to defend. Brands can prevent dilution by treating brand equity as a strategic asset and using brand equity models to assess whether growth reinforces the core brand or undermines it.

The Rise of AI in Branding — Hype vs Reality

Why generative AI seems like a shortcut

Generative AI tools look like a shortcut because they reduce the cost of producing drafts. Large language models can generate text, rewrite messaging, and produce variations for different markets in seconds. Under time pressure, this can feel like a path to clarity.

The reality is that language models are optimized for plausible continuation, not for the correct answer in a strategic sense. Even when outputs sound confident, they can contain inaccurate information. The same question can yield different answers, especially with vague prompts or insufficient constraints, which is how teams end up with false narratives that sound coherent but do not match brand truth. Managing these variations makes brand consistency harder to maintain.

What AI can contribute to brand work

AI tools can contribute meaningfully when they are used as operational support. They can summarize academic papers and prior research, speed up literature reviews, and help analysts scan large volumes of web pages and internet data for recurring themes. They can also accelerate pattern detection across customer data, online conversations, and social listening, including sentiment analysis metrics such as net sentiment and the volume of negative mentions, which flag risks to brand perception.
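Net sentiment is a simple example of the kind of metric these tools report. Definitions vary between social listening vendors; the sketch below assumes one common form, positive minus negative mentions divided by all polar (non-neutral) mentions, scaled to a range of -100 to 100. It takes per-mention labels as already produced by some classifier; the function name and sample labels are illustrative only.

```python
from collections import Counter


def net_sentiment(labels: list[str]) -> float:
    """Return net sentiment in [-100, 100] from per-mention labels.

    Assumed definition: (positive - negative) / (positive + negative) * 100.
    Neutral mentions are ignored; other tools may define the metric differently.
    """
    counts = Counter(labels)  # missing labels count as 0
    pos, neg = counts["positive"], counts["negative"]
    if pos + neg == 0:
        return 0.0  # no polar mentions: report neutral instead of dividing by zero
    return 100.0 * (pos - neg) / (pos + neg)


# Hypothetical labels, e.g. from a sentiment classifier run over social mentions.
mentions = ["positive", "negative", "neutral", "positive", "positive", "negative"]
print(net_sentiment(mentions))  # 20.0
```

The number only signals where to look; deciding whether a dip reflects a real brand problem still requires someone to read the underlying mentions.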

AI can also support retrieval-augmented generation (RAG) when paired with external data and a curated knowledge base. In that workflow, the AI model is less likely to produce inaccurate claims because its answers are grounded in factual data retrieved from vetted sources. This does not eliminate hallucinations, but it can reduce misleading outputs when the system is well governed.
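A minimal sketch of the retrieval step, assuming a small in-memory knowledge base with hypothetical source IDs (`kb-001` and so on) and using simple word overlap as a stand-in for the embedding search a real system would use. The point it illustrates is the governance rule: if nothing relevant is retrieved, the function returns `None` so the question can go to a person rather than to unconstrained generation.

```python
import re
from typing import Optional

# Hypothetical curated knowledge base: approved brand facts keyed by source ID.
KNOWLEDGE_BASE = {
    "kb-001": "The Pro plan includes single sign-on and audit logs.",
    "kb-002": "Refunds are available within 30 days of purchase.",
    "kb-003": "The product does not currently offer an offline mode.",
}


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2, min_overlap: int = 3) -> list[tuple[str, str]]:
    """Return up to k (source_id, passage) pairs sharing at least min_overlap words with the question."""
    q = tokens(question)
    scored = sorted(
        ((len(q & tokens(text)), doc_id, text) for doc_id, text in KNOWLEDGE_BASE.items()),
        reverse=True,
    )
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score >= min_overlap]


def build_grounded_prompt(question: str) -> Optional[str]:
    """Build a prompt restricted to retrieved sources, or None if nothing relevant was found."""
    passages = retrieve(question)
    if not passages:
        return None  # nothing to ground on: route to a human instead of generating
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not answer the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


print(build_grounded_prompt("Does the Pro plan include single sign-on?"))
print(build_grounded_prompt("What is the weather in Paris?"))  # None
```

The returned prompt would then be sent to whichever model the team has approved; no particular model API is assumed here.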

Where AI falls short in strategic thinking

Strategic brand decisions require context, tradeoffs, and human judgement. Generative AI models can mimic the language of strategy, but they do not understand what the company must protect, what it can change, and what it should refuse. They cannot define purpose, calibrate ambition against business model constraints, or decide which new market to prioritize.

This is also where the question “why is generative AI bad?” often arises. It is not bad as a tool, but it is structurally limited as a decision maker: it optimizes for probable text rather than coherent strategic direction.

Limitations of Artificial Intelligence in Brand Strategy

AI lacks context, cultural nuance, and purpose

Artificial intelligence operates on statistical patterns in its training data. Language models learn relationships between tokens (words and word fragments), and when those patterns are incomplete or misapplied, the result can be errors such as garbled text or hallucinated facts. AI cannot access lived context the way humans can, and it cannot reliably infer cultural nuance, moral boundaries, or purpose beyond pattern matching. That matters in branding because a brand’s identity is not only what is said, but what is consistently chosen over time, including what is not said.

Even explainable AI does not close this gap. Explainable AI can help interpret why a model produced a given output, but it does not supply the human layer of meaning required to judge whether that output aligns with the brand promise, risk posture, and customer trust requirements.

AI hallucination — what it is and why it matters

AI hallucinations are outputs that appear plausible but are factually incorrect or unsupported. They arise because large language models generate text from learned probability distributions rather than by verifying truth. In operational terms, hallucination is a factual accuracy problem: the model produces incorrect information and misleading outputs, even when it sounds certain. In their survey of hallucination in natural language generation, Ji et al. classify hallucinations into two categories: intrinsic hallucinations, where the output contradicts the source content, and extrinsic hallucinations, where the output cannot be verified from the source. The distinction helps researchers identify and address each type.

Hallucination mitigation techniques can reduce risk, but they cannot eliminate hallucinations entirely. Common approaches include grounding generation in external data through retrieval-augmented generation, adding fact-checking layers, applying confidence scores as thresholds, and instructing the model to acknowledge uncertainty when evidence is missing. These controls help, but they still depend on high-quality input data and on governance that is hard to maintain across teams.
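To make two of these controls concrete, here is a sketch of a post-generation gate that applies a confidence threshold and then checks the claim against a hypothetical registry of verified product facts. The registry, the `FeatureClaim` structure, and the 0.8 threshold are all assumptions for illustration. The design choice it shows is that confidence is necessary but not sufficient: a claim made with 0.9 confidence about a feature that does not exist is still blocked, because a model's confidence score is not evidence that a claim is true.

```python
from dataclasses import dataclass

# Hypothetical registry of verified product facts, owned by the product team.
VERIFIED_FEATURES = {"single sign-on": True, "audit logs": True, "offline mode": False}
CONFIDENCE_THRESHOLD = 0.8  # assumed cut-off; would need tuning per use case


@dataclass
class FeatureClaim:
    feature: str
    available: bool
    confidence: float  # model-reported score in [0, 1]; not proof of truth


def review_claim(claim: FeatureClaim) -> str:
    """Return 'publish', 'block', or 'abstain' for one generated claim."""
    if claim.confidence < CONFIDENCE_THRESHOLD:
        # Low confidence: acknowledge uncertainty and hold for human review.
        return "abstain"
    known = VERIFIED_FEATURES.get(claim.feature)
    if known is None:
        # No verified fact to check against: do not let the model guess.
        return "abstain"
    if known != claim.available:
        # Contradicts verified facts, however confident the model sounds.
        return "block"
    return "publish"


print(review_claim(FeatureClaim("offline mode", True, 0.9)))   # block
print(review_claim(FeatureClaim("audit logs", True, 0.95)))    # publish
print(review_claim(FeatureClaim("api webhooks", True, 0.99)))  # abstain
```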

Hallucinations in AI outputs: examples that misalign with brand truth

Hallucinations in AI outputs become brand risks when they introduce false commitments or invented facts into customer-facing materials. An AI chatbot might describe a feature that does not exist, promise terms that contradict policy, or present factually incorrect comparisons with competitors. For example, a chatbot could state with "90% confidence" that a product feature is available when it is not, misleading both customers and staff. That false information then spreads through support tickets, sales conversations, and marketing assets.

The same risk applies to brand narratives. AI-generated content can make confident positioning claims that do not match the company’s actual strengths, leading to a brand promise the product cannot support. In high-trust categories, inaccurate statements and unexpected results in customer interactions can cause reputational harm, especially when screenshots circulate and draw coverage of misleading outputs and adversarial attacks in outlets such as the New York Times or MIT Technology Review.

Why AI Content Alone Can’t Build Brand Strategy

AI output vs brand intent

Brand intent is deliberate. It is the result of strategic choices about what the brand represents, how the company competes, and what it will consistently deliver. AI output is probabilistic. It predicts the next word based on patterns in training data, and it cannot guarantee alignment with core strategy.

This is why teams cannot treat AI outputs as strategy artifacts. Without a stable strategy, AI-generated text drifts toward generic market language, and different teams generate conflicting versions of the brand. The result is not speed, but inconsistency.

Risks of over-reliance on AI content moderation tools

AI content moderation is useful for compliance and basic screening, but over-reliance can flatten voice and remove nuance. When moderation rules are too strict or too generic, the system pushes everything toward safe sameness. That can erase differentiation and weaken perceived value, especially when the brand’s personality depends on controlled specificity.

Moderation also inherits the limitations of the underlying AI model. If the moderation system is trained on incomplete datasets or biased samples, it can misclassify intent and distort tone, creating a mismatch between brand messaging and audience expectations.

Why “put AI on it” can lead to brand inconsistency

“Put AI on it” often means distributing generative AI tools across teams with minimal governance. Each function generates its own copy, claims, and framing. Even with shared prompts, the same question can yield different answers, and those variations become the brand in the market.

This is the direct path to inconsistency. The brand team loses control of standards, and the organization produces a fragmented set of messages, assets, and claims. Over time, this can contribute to brand dilution and weaken brand equity.

When AI Actually Helps Brand Work (Without Replacing Strategy)

How AI supports research, ideation, & pattern detection

AI can accelerate research by summarizing external sources, scanning web pages, and synthesizing academic papers. It can support pattern detection across customer data, online reviews, and social listening signals. It can also help teams explore a wider range of ideas faster, generating multiple candidate framings for human evaluation.

Used this way, AI tools reduce time spent on mechanical synthesis and help humans make better-informed decisions. The key is that humans validate, select, and align outputs to strategy.

AI as a tool, not a strategist

AI should be treated as an amplifier of workflows, not a substitute for strategic judgement. Large language models can generate options, but they cannot determine which option is true to the brand, feasible for the business model, and credible in the market. That decision belongs to people who understand the company, its customers, and its risk constraints.

This boundary matters for governance. If AI systems are positioned as decision makers, errors become systemic. If they are positioned as assistants, errors are easier to catch, and the brand remains coherent.

Workflow integration without strategic dependency

Workflow integration should be designed so that AI supports execution without becoming a strategic dependency. A practical pattern is to keep AI-generated content in a draft state, require human review before anything is published, and ground high-risk outputs in vetted data pulled from a knowledge base.
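The draft-then-review pattern can be enforced in tooling rather than left to convention. This sketch assumes a hypothetical `Asset` record and four states; the transition table makes it impossible to publish content that has not passed review and been approved by a named reviewer.

```python
from enum import Enum
from typing import Optional


class State(Enum):
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    PUBLISHED = "published"


# Allowed transitions: every asset starts as a draft and can reach
# PUBLISHED only by passing through review and approval.
TRANSITIONS = {
    State.DRAFT: {State.IN_REVIEW},
    State.IN_REVIEW: {State.APPROVED, State.DRAFT},  # approve or send back
    State.APPROVED: {State.PUBLISHED},
    State.PUBLISHED: set(),
}


class Asset:
    """Hypothetical content record; `source` notes whether AI produced the draft."""

    def __init__(self, text: str, source: str) -> None:
        self.text = text
        self.source = source
        self.state = State.DRAFT
        self.approved_by: Optional[str] = None

    def move_to(self, new_state: State, reviewer: Optional[str] = None) -> None:
        """Advance the asset, rejecting any transition the policy does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.value} to {new_state.value}")
        if new_state is State.APPROVED:
            if not reviewer:
                raise ValueError("approval requires a named human reviewer")
            self.approved_by = reviewer
        self.state = new_state


# Usage: an AI-drafted tagline must be reviewed and approved before publishing.
tagline = Asset("Hypothetical AI-drafted tagline", source="ai")
tagline.move_to(State.IN_REVIEW)
tagline.move_to(State.APPROVED, reviewer="brand-lead")
tagline.move_to(State.PUBLISHED)
```

Keeping the rules in a single transition table makes the policy easy to audit and change without touching the rest of the workflow.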

For risk control, teams can combine retrieval-augmented generation, confidence scores, and structured prompts that require the model to cite sources, acknowledge uncertainty, and decline to invent details when factual data is missing. These practices reduce hallucinations, but they still require governance, training, and quality control.
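Structured prompts are easier to enforce when the response format is checked in code. The sketch below assumes the prompt asks the model to reply with JSON containing `answer`, `citations`, and `uncertain` fields; that contract and the source IDs are hypothetical. The validator rejects answers that cite sources the model was never given, which is one common form of invented detail.

```python
import json

# Hypothetical IDs of the sources actually supplied to the model in the prompt.
SUPPLIED_SOURCES = {"kb-001", "kb-002"}
REQUIRED_KEYS = {"answer", "citations", "uncertain"}


def validate_response(raw: str) -> tuple[bool, str]:
    """Check a reply against an assumed JSON contract:
    {"answer": str, "citations": [source IDs], "uncertain": bool}.
    Returns (accepted, reason).
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False, "not valid JSON"
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return False, "missing required fields"
    if data["uncertain"]:
        # The model acknowledged missing evidence: accept, but route to a person.
        return True, "uncertain: route to human review"
    citations = data["citations"]
    if not isinstance(citations, list) or not citations:
        return False, "factual answer without citations"
    invented = set(citations) - SUPPLIED_SOURCES
    if invented:
        return False, f"cites sources that were not supplied: {sorted(invented)}"
    return True, "ok"


print(validate_response('{"answer": "Yes", "citations": ["kb-001"], "uncertain": false}'))
print(validate_response('{"answer": "Yes", "citations": ["kb-042"], "uncertain": false}'))
```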

How to Fix a Broken Brand Strategy (Without Relying on AI)

Start with core human-centered strategy fundamentals

Fixing strategy starts with diagnosis. Identify where customers perceive inconsistency, where teams disagree, and where decisions lack a clear filter. Use market research, stakeholder interviews, and audits of messaging and assets to map the current reality of the brand’s identity.

Then establish decision rights. Brand management needs clear ownership, a consistent approval process, and a system for updating standards. Without governance, every new asset becomes an opportunity for drift, even if AI is not involved.

Brand purpose, audience insight, positioning clarity

Purpose and positioning are not outputs; they are commitments. Clarify what the brand stands for, what it refuses, and what it promises. Build audience insight around decision context, not demographics, especially in B2B, where buying is collective and risk-weighted. Define positioning that can be repeated across channels without collapsing into generic language.

This is also where preventing brand dilution becomes practical. Limit brand extension to areas that reinforce the core brand. Use brand equity models to evaluate whether a new offer strengthens meaning or creates confusion, and treat the signals of a failed brand extension as strategic data, not as a design issue.

How strategic design and human judgement realign brand value

Strategic design translates strategy into repeatable systems. Build a design and messaging system that standardizes brand messaging, defines constraints, and makes the on-strategy choice the default. Pair this with human judgement, because governance requires context, cultural awareness, and accountability.

AI can support this layer, but it cannot replace it. The realignment of brand value depends on disciplined choices, consistent execution, and a strategy that remains stable enough for the market to recognize, trust, and reward.

Examples

Salesforce

Salesforce uses AI in customer-facing features and internal workflows, but its brand strategy is anchored in stable positioning and a consistent narrative across products. When AI-generated content is used for enablement drafts, it is governed through clear standards so outputs do not drift into false narratives or inconsistent promises.

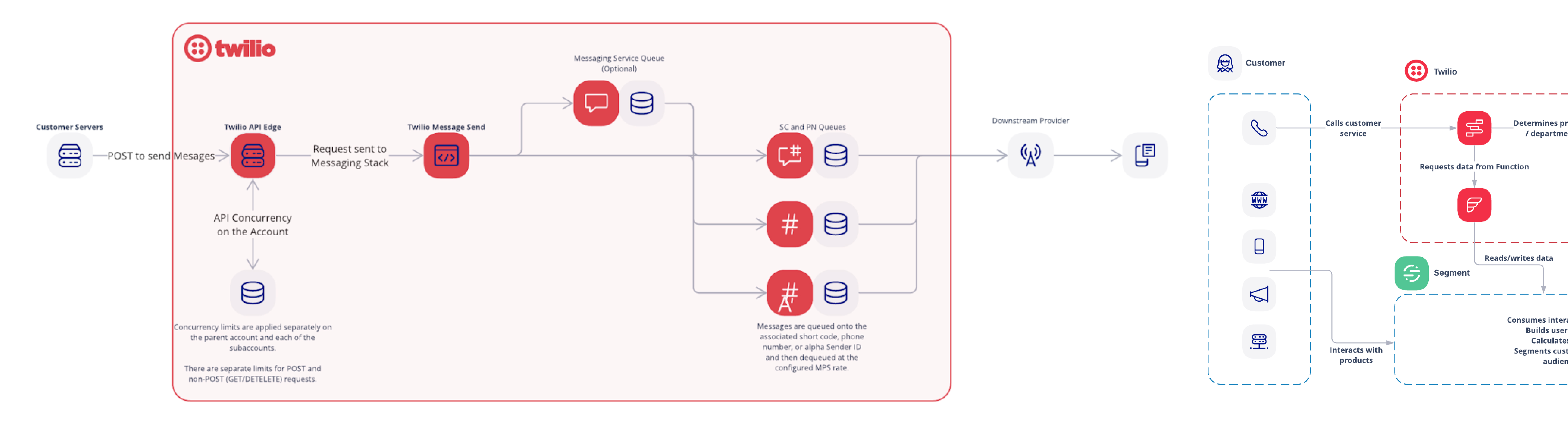

Twilio

Twilio operates in a technical category where factual accuracy is essential, and AI hallucinations can create reputational risk if they appear in documentation or product claims. The brand’s integrity depends on disciplined language and verification workflows, using external data and review steps so AI outputs do not introduce factually incorrect statements that conflict with brand truth.

FAQs

Why can’t AI fix a broken brand strategy?

Because AI generates outputs, not intent. Without a coherent strategy, AI scales inconsistency and increases the risk of misleading outputs.

What is AI hallucination and how does it impact branding?

AI hallucination is when a model produces plausible but incorrect information. In branding it can introduce false claims, damage customer trust, and weaken brand equity.

Can generative AI ever replace strategic brand thinking?

No. Generative AI can support research and drafting, but strategic choices require human context, purpose, and accountability.

How do hallucinations in AI outputs hurt brand consistency?

They create conflicting facts and promises across channels. Over time this fragments brand messaging and can contribute to brand dilution.

What are the limitations of artificial intelligence in brand strategy?

AI lacks purpose and cultural nuance, depends on training data quality, and can generate inaccurate information, especially under vague prompts or missing context.
